Ubiquitous Computing

David Naylor, President, University of Toronto

Contributing to the Social Issues in Computing blog has caused a subacute exacerbation of my chronic case of impostor syndrome. I am, after all, a professor of medicine treading in the digital backyard of the University’s renowned Department of Computer Science [CS].

That said, there are three reasons why I am glad to have been invited to contribute.

First, with a few weeks to go before I retire from the President’s Office, it is a distinct privilege for me to say again how fortunate the University has been to have such a remarkable succession of faculty, staff, and students move through CS at U of T.

Second, this celebration of the 40th anniversary of Social Issues in Computing affords me an opportunity to join others in acknowledging Kelly Gotlieb, visionary founder of the Department, and Allan Borodin, a former Department chair who is renowned worldwide for his seminal research work.

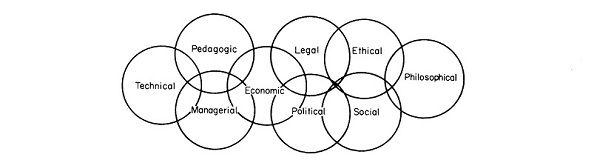

Third, it seems to me that Social Issues in Computing both illustrated and anticipated what has emerged as a great comparative advantage of our university and similar large research-intensive institutions worldwide. We are able within one university to bring together scholars and students with a huge range of perspectives and thereby make better sense of the complex issues that confront our species on this planet. That advantage obviously does not vitiate the need to collaborate widely. But it does make it easier for conversations to occur that cross disciplinary boundaries.

All three of those themes were affirmed last month when two famous CS alumni were awarded honorary doctorates during our Convocation season. Dr Bill Buxton and Dr Bill Reeves are both in their own ways heirs to the intellectual legacy of Gotlieb, Borodin, and many other path-finders whom they both generously acknowledged at the events surrounding their honorary degrees. Among those events was a memorable celebratory dinner at the President’s Residence, complete with an impromptu performance by a previous honorary graduate — Dr Paul Hoffert, the legendary musician and composer, who is also a digital media pioneer. But the highlight for many of us was a stimulating conversation at the MaRS Centre featuring the CS ‘Double Bill’, moderated by another outstanding CS graduate and faculty member, Dr Eugene Fiume.

Drs Buxton and Reeves were part of a stellar group of University of Toronto CS graduate students and faculty back in the 1970s and 1980s. At that time, the Computer Science Department, and perhaps especially its Dynamic Graphics Project, was at the very heart of an emerging digital revolution.

Today, Dr Buxton is Principal Researcher at Microsoft and Dr Reeves is Technical Director at Pixar Animation Studios. The core businesses of those two world-renowned companies were unimaginable for most of us ordinary mortals forty years ago when Social Issues in Computing was published. Human-computer interaction was largely conducted through punch cards and monochrome text displays. Keyboards, colour graphical display screens, and disk drives were rudimentary and primitive. Indeed, they were still called ‘peripherals’ and one can appreciate their relative status in the etymology of the word. The mouse and the graphical user interface, justly hailed as advances in interface design, were steps in the right direction. But many in U of T’s CS department and their industry partners saw that these were modest steps at best, failing to sufficiently put the human in human-computer interaction. Toronto’s CS community accordingly played a pivotal role in shaping two themes that defined the modern digital era. The first was the primacy of user experience. The second was the potential for digital artistry. From Alias / Wavefront / Autodesk and Maya to multi-touch screens, breakthroughs in computer animation and Academy Award-winning films, Toronto’s faculty, staff, students and alumni have been at the forefront of humanized digital technology.

What will the next forty years bring? The short answer is that I have no idea. We live in an era of accelerating change that is moving humanity in directions that are both very exciting and somewhat unsettling. I’ll therefore take a shorter-term view and, as an amateur, offer just a few brief observations on three ‘Big Things’ in the CS realm.

First and foremost, we seem to have entered the era of ubiquitous computing. Even if the physical fusion occurs only rarely (e.g. externally programmable cardiac pacemakers), in a manner of speaking we are all cyborgs now. Our dependency on an ever-widening range of digital devices borders on alarming, and evidence of the related threats to privacy continues to grow. However, the benefits have also been incalculable in terms of safety, convenience, relief from drudgery, productivity and connectivity. At the centre of this human-computer revolution has been the rise of mobile computing – and the transformation of the old-fashioned cell phone into a powerful hand-held computer. Add in tablets, notebooks, and ultra-lightweight laptops and the result is an intensity of human-computer interaction that is already staggering and growing exponentially. The trans-disciplinary study of the social and psychological implications of this shift in human existence will, I hope, remain a major priority for scholars and students at the University of Toronto in the years ahead as others follow the lead of Gotlieb, Borodin and their collaborators.

A second topic of endless conversation is ‘The Cloud’. I dislike the term, not because it is inaccurate, but precisely because it aptly captures a new level of indifference to where data are stored. I cannot fully overcome a certain primitive unease about the assembly of unthinkable amounts of data in places and circumstances about which so little is known. Nonetheless, the spread of server farms and growth of virtual machines are true game-changers in mobile computing. Mobility on its own promotes ubiquity but leads to challenges of integration – and it is the latter challenge that has been addressed in part by cloud-based data storage and the synergy of on-board and remote software.

A third key element, of course, is the phenomenon known as ‘Big Data’. The term seems to mean different things to different audiences. Howsoever one defines ‘Big Data’, the last decade has seen an explosion in the collection of data about everything. Ubiquitous computing and the rise of digitized monitoring and logging have meant that human and automated mechanical activity alike are creating on an almost accidental basis a second-to-second digital record that has reached gargantuan proportions. We have also seen the emergence of data-intensive research in academe and industry. Robotics and digitization have made it feasible to collect more information in the throes of doing experimental or observational research. And the capacity to store and access those data has grown apace, driving the flywheel faster.

Above all, we have developed new capacity to mine huge databases quickly and intelligently. Here it is perhaps reasonable to put up a warning flag. There is, I think, a risk of the loss of some humanizing elements as Big Data become the stock in trade for making sense of our world. Big Data advance syntax over semantics. Consider: The more an individual’s (or a society’s) literary preferences can be reduced to a history of clicks – in the form of purchases at online bookstores, say – the less retailers and advertisers (perhaps even publishers, critics, and writers) might care about understanding those preferences. Why does someone enjoy this or that genre? Is it the compelling characters? The fine writing? The political engagement? The trade-paperback format?

On this narrow view, understanding preferences does not matter so much as cataloguing them. Scientists, of course, worry about distinguishing causation from correlation. But why all the fuss about root causes, the marketing wizards might ask: let’s just find the target, deliver the message, and wait for more orders. Indeed, some worry that a similar indifference will afflict science, with observers like Chris Anderson, past editor of Wired, arguing that we may be facing ‘the end of theory’ as ‘the data deluge makes the scientific method obsolete’. I know that in bioinformatics, this issue of hypothesis-free science has been alive for several years. Moreover, epidemiologists, statisticians, philosophers, and computer scientists have all tried to untangle the changing frame of reference for causal inference and, more generally, what ‘doing science’ means in such a data-rich era.

On a personal note, having dabbled a bit in this realm (including an outing in Statistics in Medicine as the data broker for another CS giant, Geoff Hinton, and a still-lamented superstar who moved south, Rob Tibshirani), I remain deeply uncertain about the relative merits and meanings of these different modes of turning data into information, let alone deriving knowledge from the resulting information.

Nonetheless, I remain optimistic. And in that regard, let me take my field, medicine, as an example. To paraphrase William Osler (1849-1919), one of Canada’s best-known medical expatriates, medicine continues to blend the science of probability with the art of uncertainty. The hard reality is that much of what we do in public health and clinical practice involves educated guesses. Evidence consists in effect sizes quantifying relative risks or benefits, based on population averages. Yet each individual patient is unique. Thus, for each intervention – be it a treatment of an illness or a preventive manoeuvre – many individuals must be exposed to the risks and costs of intervention for every one who benefits. Eric Topol, among others, has argued that new biomarkers and monitoring capabilities mean that we are finally able to break out of this framework of averages and guesswork. The convergence of major advances in biomedical science, ubiquitous computing, and massive data storage and processing capacity has meant that we are now entering a new era of personalized or precision medicine. The benefits should include not only more effective treatments with reduced side-effects from drugs. The emerging paradigm will also enable customization of prevention, so that lifestyle choices – including diet and exercise patterns – can be made with a much clearer understanding of the implications of those decisions for downstream risk of various disease states.

We are already seeing a shift in health services in many jurisdictions through adoption of virtual health records that follow the patient, with built-in coaching on disease management. Combine these records with mobile monitoring and biofeedback and there is tremendous potential for individuals to take greater responsibility for the management of their own health. There is also the capacity for much improved management of the quality of health services and more informed decision-making by professionals and patients alike.

All this, I would argue, is very much in keeping with ubiquitous computing as an enabler of autonomy, informed choice, and human well-being. It is also entirely in the spirit of the revolutionary work of many visionaries in CS at the University of Toronto who first began to re-imagine the roles and relationships of humans and computers. Here, I am reminded that Edmund Pellegrino once described medicine as the most humane of the sciences and the most scientific of the humanities. The same could well be said for modern computer science – a situation for which the world owes much to the genius of successive generations of faculty, staff and students in our University’s Department of Computer Science.

Selected references:

“A comparison of statistical learning methods on the Gusto database.”

Ennis M, Hinton G, Naylor D, Revow M, Tibshirani R.

Stat Med. 1998 Nov 15;17(21):2501-8.

“Predicting mortality after coronary artery bypass surgery: what do artificial neural networks learn? The Steering Committee of the Cardiac Care Network of Ontario.”

Tu JV, Weinstein MC, McNeil BJ, Naylor CD.

Med Decis Making. 1998 Apr-Jun;18(2):229-35.

“A comparison of a Bayesian vs. a frequentist method for profiling hospital performance.”

Austin PC, Naylor CD, Tu JV.

J Eval Clin Pract. 2001 Feb;7(1):35-45.

“Grey zones of clinical practice: some limits to evidence-based medicine.”

Naylor CD.

Lancet. 1995 Apr 1;345(8953):840-2.